First one to say geom_ribbon loses. I was plotting some data for a colleague, had two lines (repeated experiment) per person (time on the x axis) facetted by id, I thought it’d be nice to shade the area between the two lines so that when they were deviating you’d see a large shaded area, and when they were close there would be little shading, just to aid the visual of the separation between repeats. I thought this would be trival, and geom_ribbon would do the trick, alas, some of the lines crossed so that didn’t pan out. Ignoring the ‘experiment/order’ variable and reordering the data to track the max and min values at each time point doesn’t work, because when they cross you end up with a box around the cross, rather the inside region being shaded.

I did think this would have been done before, but I couldn’t find anything that resuable. There was this blog post but like user Aniko mentioned in the comments, that was essentially finding the midpoints by hand, which seemed a bit clumsy (not saying the below is any better). Aniko’s solution used a package gpclib to create polygons for each block of colour, which was about where I got to when looking for a ggplot strategy. I played around a bit and couldn’t get the gpc.poly data to work with ggplot, so put together a couple of small functions to essentially do the same thing.

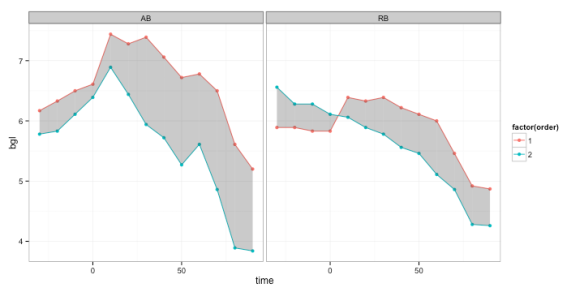

So here’s the code and output.

# load data

library(ggplot2)

library(RCurl)

library(gridExtra)

library(plyr)

theme_set(theme_bw())

dat <-read.csv(textConnection(getURL("https://raw.githubusercontent.com/nzcoops/datasets/master/shading_two_lines")))

h(dat)## id order time bgl

## 1 AB 1 -30 6.17

## 2 AB 1 -20 6.33

## 3 AB 1 -10 6.50

## 4 AB 1 0 6.61

## 5 AB 1 10 7.44

## 6 AB 1 20 7.28

# this block is run within each person essentially it creates a duplicate of

# all rows bar the first and last two and adds a grouping variable to the

# end that way every 4 rows are will be the coords for a polygon

mperson <-function(x) {

x <-x[order(x$time), ]

y <-x[-c(1, 2, nrow(x) -1, nrow(x)), ]

x <-rbind(x, y)

x <-x[order(x$time), ]

x$group <-rep(letters[1:(nrow(x)/4)], each = 4)

return(x)

}

dat2 <-ddply(dat, .(id), mperson)

h(dat2)## id order time bgl group

## 1 AB 1 -30 6.17 a

## 2 AB 2 -30 5.78 a

## 3 AB 1 -20 6.33 a

## 4 AB 2 -20 5.83 a

## 5 AB 1 -20 6.33 b

## 6 AB 2 -20 5.83 b

# this block is run within each person and 'block (group)' of 4 rows (each

# polygon) essentially this is to get the rows in the correct order, so that

# the geom_polygon function can work clockwise to construct the polygons the

# correct way

mgroup <-function(x) {

x <-x[order(x$bgl), ]

left <-x[x$time ==min(x$time), ]

right <-x[x$time ==max(x$time), ]

if (all(left$order ==right$order)) {

left <-left[order(left$bgl, decreasing = T), ]

right <-right[order(right$bgl, decreasing = F), ]

return(rbind(left, right))

} else {

return(x[order(x$time), ])

}

}

dat2 <-ddply(dat2, .(id, group), mgroup)

h(dat2)## id order time bgl group

## 1 AB 1 -30 6.17 a

## 2 AB 2 -30 5.78 a

## 3 AB 2 -20 5.83 a

## 4 AB 1 -20 6.33 a

## 5 AB 1 -20 6.33 b

## 6 AB 2 -20 5.83 bAnd here’s the plot

ggplot(dat, aes(x = time, y = bgl, group = order)) +geom_line(aes(colour = factor(order))) +

geom_point(aes(colour = factor(order))) +geom_polygon(data = dat2, aes(y = bgl,

group = group), alpha = 0.3) +facet_wrap(~id)I wrote this post in RStudio using the R Markdown language and then knitr to turn in into markdown (.md), and then pandoc to turn it into html. The original file is available here on github.

system(“pandoc -s shading_between_the_lines.md -o shading_between_the_lines.html”)

As an aside, the mgroup function might seem like overkill, but it was a bit tricky, as when the lines cross you have to be careful to get the right ‘hourglass’ orientation, either vertical or horizontal.

dat <-data.frame(x = c(10, 10, 20, 20), y = c(3, 4, 5, 2), order = c(1, 2,

1, 2))

a <-ggplot(dat, aes(x = x, y = y)) +geom_line(aes(group = order)) +geom_point(aes(group = order)) +

geom_polygon(aes(x = x, y = y), fill = "red", alpha = 0.2)

dat <-data.frame(x = c(10, 10, 20, 20), y = c(3, 4, 2, 5), order = c(1, 2,

1, 2))

b <-ggplot(dat, aes(x = x, y = y)) +geom_line(aes(group = order)) +geom_point(aes(group = order)) +

geom_polygon(aes(x = x, y = y), fill = "red", alpha = 0.2)

dat <-data.frame(x = c(10, 20, 10, 20), y = c(3, 4, 5, 2), order = c(1, 2,

2, 1))

c <-ggplot(dat, aes(x = x, y = y)) +geom_line(aes(group = order)) +geom_point(aes(group = order)) +

geom_polygon(aes(x = x, y = y), fill = "red", alpha = 0.2)grid.arrange(a, b, c, nrow = 1)